Rows: 3,757

Columns: 8

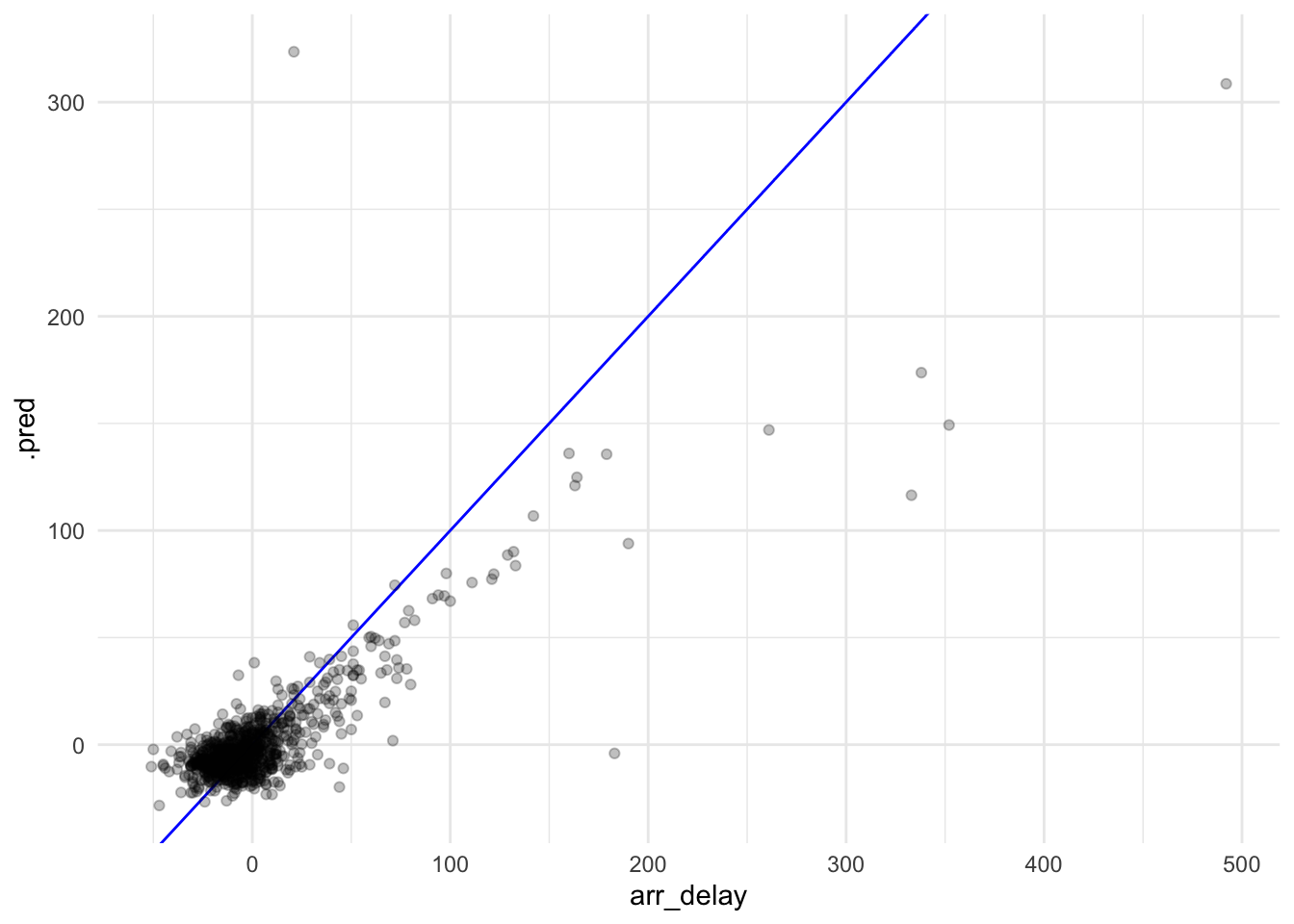

$ arr_delay <dbl> 4, -15, -12, 38, -9, -17, 5, 12, -40, 6, -7, 28, 25, -9, 180…

$ dep_delay <dbl> 9, -8, 0, -7, 3, 6, 29, -1, 2, 7, 6, 13, 34, -2, 191, 52, 9,…

$ carrier <chr> "UA", "OO", "AA", "UA", "OO", "OO", "UA", "AA", "DL", "DL", …

$ tailnum <chr> "N37502", "N198SY", "N410AN", "N77261", "N402SY", "N509SY", …

$ origin <chr> "LAX", "LAX", "LAX", "LAX", "LAX", "LAX", "LAX", "LAX", "LAX…

$ dest <chr> "KOA", "EUG", "HNL", "DEN", "FAT", "SFO", "MCO", "MIA", "OGG…

$ distance <dbl> 2504, 748, 2556, 862, 209, 337, 2218, 2342, 2486, 862, 156, …

$ time <dttm> 2022-01-01 13:15:00, 2022-01-01 14:00:00, 2022-01-01 14:45:…